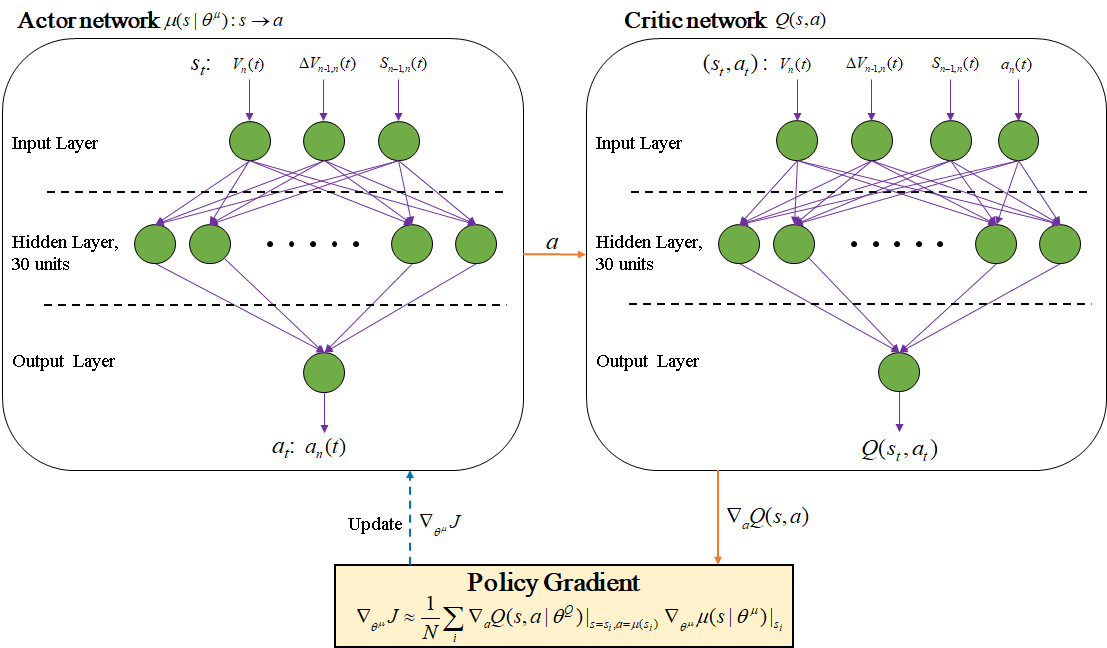

Architecture of the actor and critic networks


Safe, Efficient, and Comfortable Velocity Control based on Reinforcement Learning for Autonomous Driving

Abstract

A model for velocity control during car following was proposed based on deep reinforcement learning (RL). To fulfill the multiple objectives of car following, a reward function reflecting driving safety, efficiency, and comfort was constructed. Guided by this reward function, the RL agent learns, through trial and error in a simulation environment, to control vehicle speed in a way that maximizes cumulative reward. A total of 1,341 car-following events extracted from the public Next Generation Simulation (NGSIM) dataset were used to train the model. The car-following behavior produced by the model was then compared with that observed in the empirical NGSIM data to demonstrate the model's ability to follow a lead vehicle safely, efficiently, and comfortably. Results show that the model is capable of safe, efficient, and comfortable velocity control in that it 1) produces a smaller percentage of dangerous minimum time-to-collision (TTC) values (< 5 s) than human drivers in the NGSIM data (8% versus 35%); 2) maintains efficient and safe headways in the range of 1 s to 2 s; and 3) follows the lead vehicle comfortably with smooth acceleration. These results indicate that reinforcement learning methods could contribute to the development of autonomous driving systems.
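The abstract names the three reward objectives and the evaluation metrics but not their exact functional forms. The sketch below is only an illustration, assuming TTC as the safety signal, time headway as the efficiency signal, and jerk as the comfort signal. The 4 s TTC threshold, the 1 s to 2 s headway band used as a target, the 60 m/s³ jerk normalization, the equal weights, and all names (`FollowingState`, `reward`, `dangerous_fraction`) are hypothetical choices, not the paper's reward. The last helper mirrors the reported evaluation: the share of car-following events whose minimum TTC falls below 5 s.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Sequence, Tuple


@dataclass
class FollowingState:
    gap: float         # spacing to the lead vehicle (m)
    ego_speed: float   # following-vehicle speed (m/s)
    lead_speed: float  # lead-vehicle speed (m/s)
    jerk: float        # following-vehicle jerk (m/s^3)


def time_to_collision(gap: float, ego_speed: float, lead_speed: float) -> float:
    """TTC in seconds; infinite when the follower is not closing in."""
    closing_speed = ego_speed - lead_speed
    if closing_speed <= 0:
        return math.inf
    return gap / closing_speed


def time_headway(gap: float, ego_speed: float) -> float:
    """Time headway in seconds; infinite for a stopped follower."""
    return math.inf if ego_speed <= 0 else gap / ego_speed


def reward(s: FollowingState, ttc_threshold: float = 4.0,
           w_safe: float = 1.0, w_eff: float = 1.0, w_comf: float = 1.0) -> float:
    """Hypothetical multi-objective reward: weighted sum of three terms."""
    # Safety (assumed form): log(TTC / threshold) is negative once TTC drops
    # below the threshold; TTC is clipped at 1 ms to avoid log(0).
    ttc = time_to_collision(s.gap, s.ego_speed, s.lead_speed)
    safety = math.log(max(ttc, 1e-3) / ttc_threshold) if ttc <= ttc_threshold else 0.0

    # Efficiency (assumed form): full reward inside a 1-2 s headway band,
    # falling linearly to zero 1 s outside the band.
    h = time_headway(s.gap, s.ego_speed)
    outside = max(1.0 - h, h - 2.0, 0.0)
    efficiency = 1.0 - min(outside, 1.0)

    # Comfort (assumed form): quadratic jerk penalty normalized by an assumed
    # 60 m/s^3 range, so the term lies in [-1, 0] for |jerk| <= 60.
    comfort = -(s.jerk ** 2) / 60.0 ** 2

    return w_safe * safety + w_eff * efficiency + w_comf * comfort


def dangerous_fraction(events: Iterable[Sequence[Tuple[float, float, float]]],
                       threshold: float = 5.0) -> float:
    """Share of events whose minimum TTC is below `threshold` seconds.

    Each event is a sequence of (gap, ego_speed, lead_speed) samples.
    """
    events = list(events)
    dangerous = sum(
        1 for ev in events
        if min(time_to_collision(g, v, vl) for g, v, vl in ev) < threshold
    )
    return dangerous / len(events)


if __name__ == "__main__":
    # Steady following at a 1.5 s headway: no safety or comfort penalty.
    print(reward(FollowingState(gap=15.0, ego_speed=10.0, lead_speed=10.0, jerk=0.0)))  # 1.0
    # One closing event (min TTC 4 s) and one non-closing event.
    events = [[(20.0, 15.0, 10.0), (30.0, 15.0, 10.0)], [(30.0, 10.0, 10.0)]]
    print(dangerous_fraction(events))  # 0.5
```

The log-shaped safety term is one way to make the penalty steepen as a collision approaches while leaving safe states unpenalized. In an RL setup, a per-step reward like this would be summed (typically with discounting) over each simulated car-following event.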